MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series

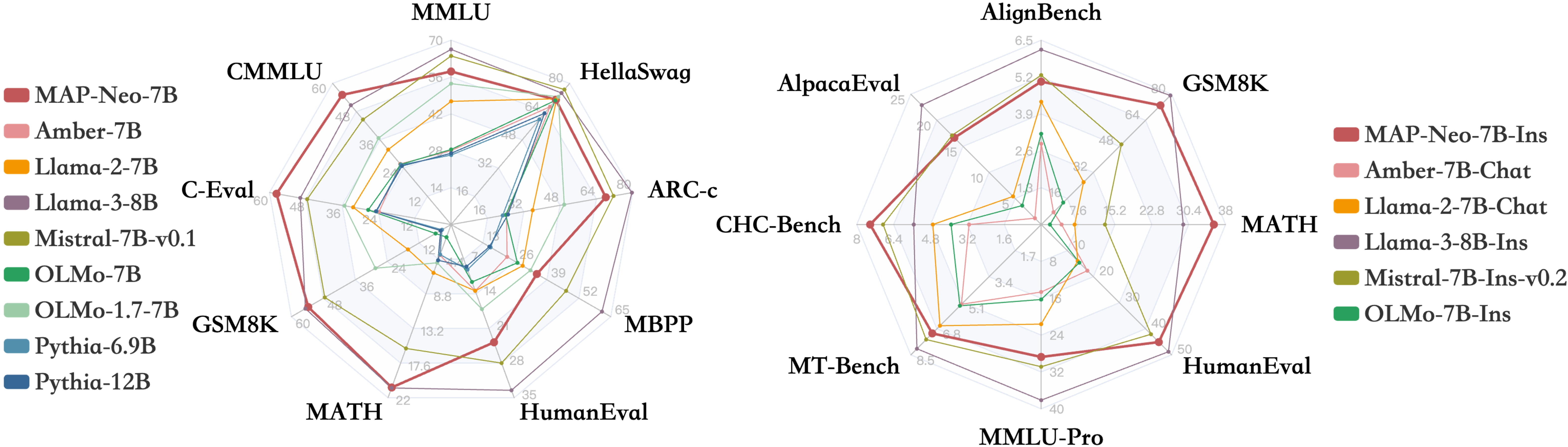

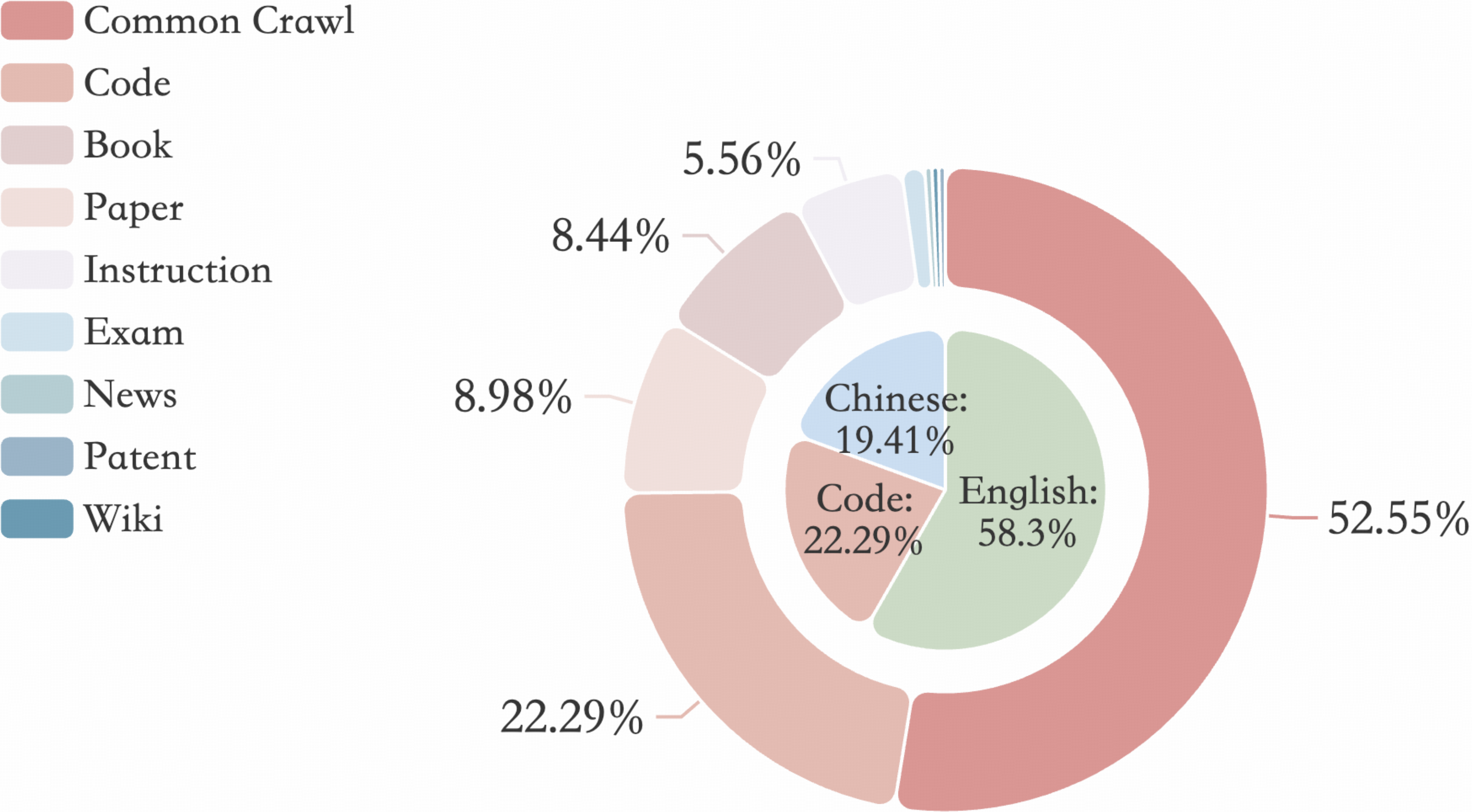

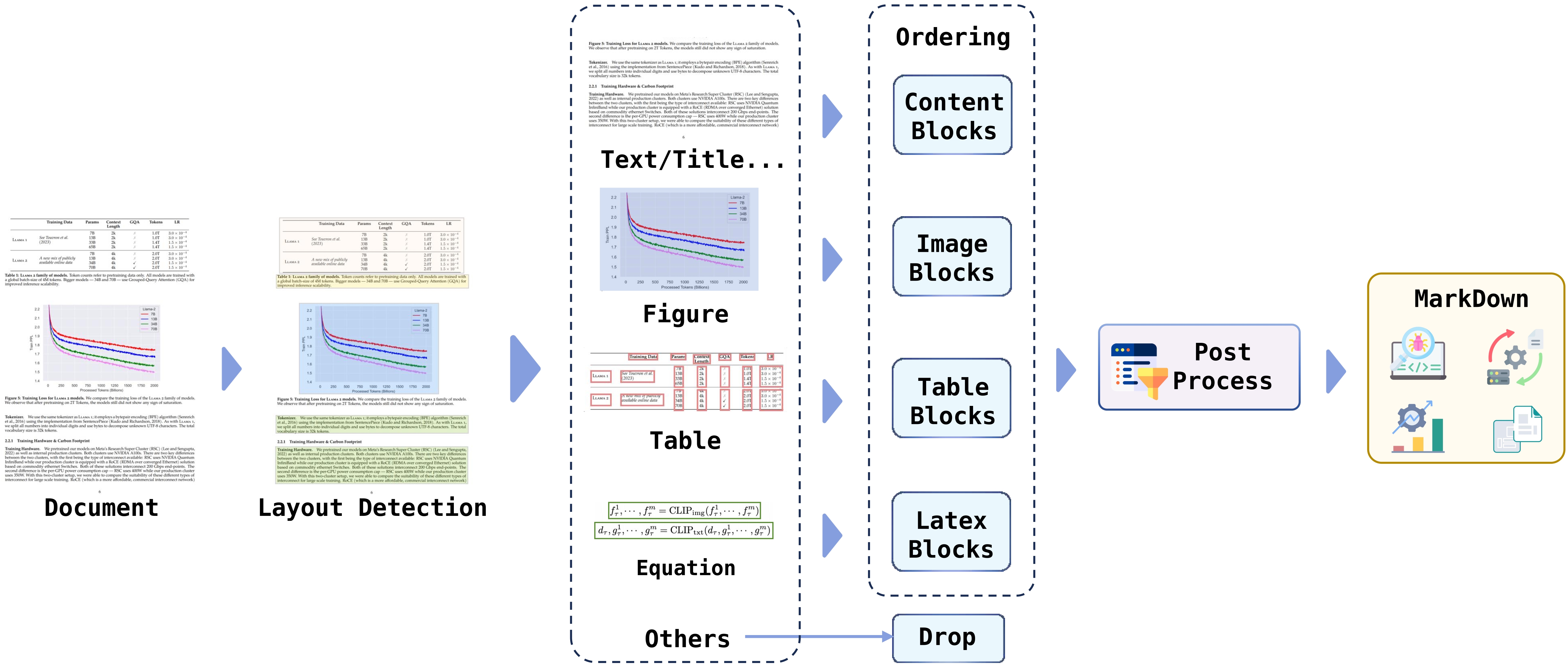

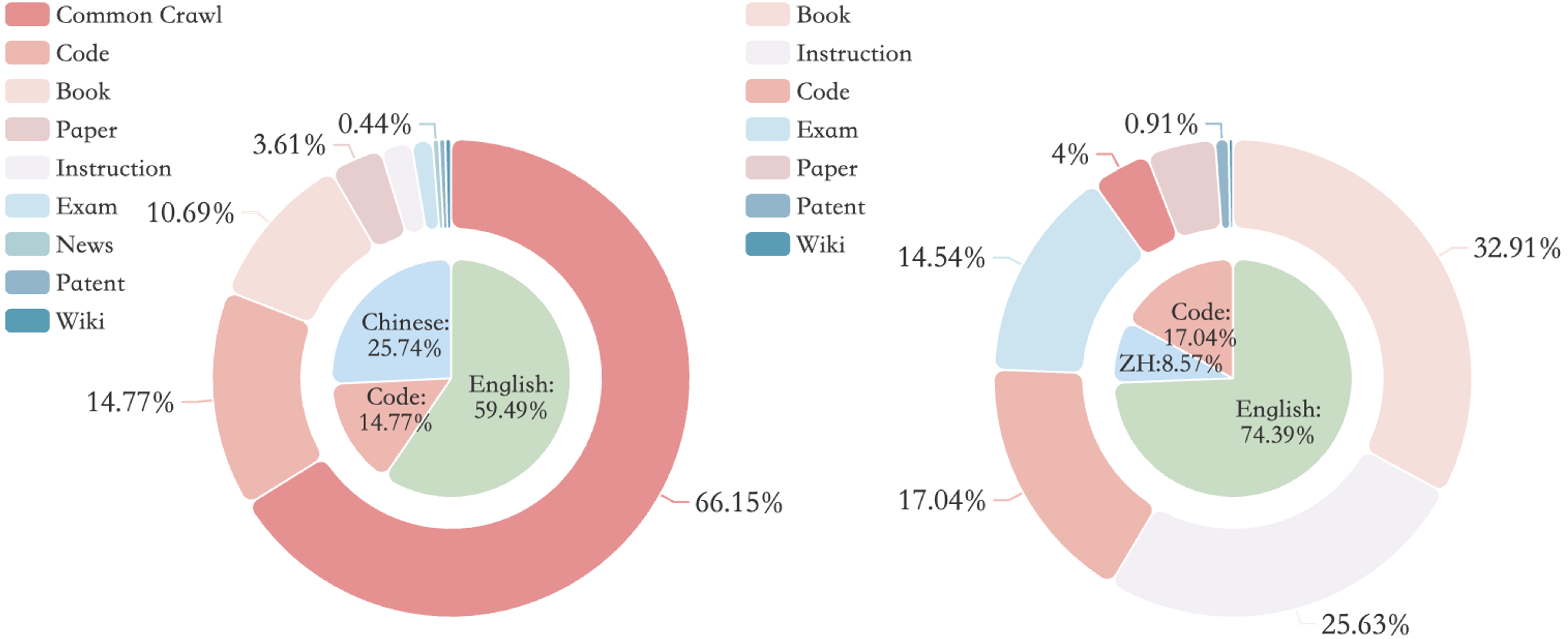

Large Language Models (LLMs) have made great strides in recent years, achieving unprecedented performance across a wide range of tasks. However, due to commercial interests, the most competitive models, such as GPT, Gemini, and Claude, are gated behind proprietary interfaces, and their training details are not disclosed. Recently, many institutions have open-sourced strong LLMs, such as LLaMA-3, that are comparable to existing closed-source LLMs. However, only the model weights are provided, while most details (e.g., intermediate checkpoints, pre-training corpus, and training code) remain undisclosed. To improve the transparency of LLMs, the research community has begun to release truly open LLMs (e.g., Pythia, Amber, OLMo), for which more details (e.g., pre-training corpus and training code) are provided. These models have greatly advanced the scientific study of large models, including their strengths, weaknesses, biases, and risks. However, we observe that existing truly open LLMs are still inferior to state-of-the-art LLMs of similar size on reasoning, knowledge, and coding tasks. To this end, we open-source MAP-Neo, a highly capable and transparent bilingual language model with 7B parameters trained from scratch on 4.5T high-quality tokens. MAP-Neo is the first fully open-sourced bilingual LLM with performance comparable to existing state-of-the-art LLMs. Moreover, we open-source all the details needed to reproduce MAP-Neo, including the cleaned pre-training corpus, the data cleaning pipeline, checkpoints, and a well-optimized training and evaluation framework. Finally, we hope MAP-Neo will strengthen the open research community and inspire further innovation and creativity that advance LLMs.

@article{zhang2024mapneo,
  title   = {MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series},
  author  = {Ge Zhang and Scott Qu and Jiaheng Liu and Chenchen Zhang and Chenghua Lin and Chou Leuang Yu and Danny Pan and Esther Cheng and Jie Liu and Qunshu Lin and Raven Yuan and Tuney Zheng and Wei Pang and Xinrun Du and Yiming Liang and Yinghao Ma and Yizhi Li and Ziyang Ma and Bill Lin and Emmanouil Benetos and Huan Yang and Junting Zhou and Kaijing Ma and Minghao Liu and Morry Niu and Noah Wang and Quehry Que and Ruibo Liu and Sine Liu and Shawn Guo and Soren Gao and Wangchunshu Zhou and Xinyue Zhang and Yizhi Zhou and Yubo Wang and Yuelin Bai and Yuhan Zhang and Yuxiang Zhang and Zenith Wang and Zhenzhu Yang and Zijian Zhao and Jiajun Zhang and Wanli Ouyang and Wenhao Huang and Wenhu Chen},
  year    = {2024},
  journal = {arXiv preprint arXiv:2405.19327}
}